Introduction

If you’ve ever built or deployed an MCP (Model Context Protocol) server, one question probably crossed your mind early on:

Where exactly should I host my MCP server?

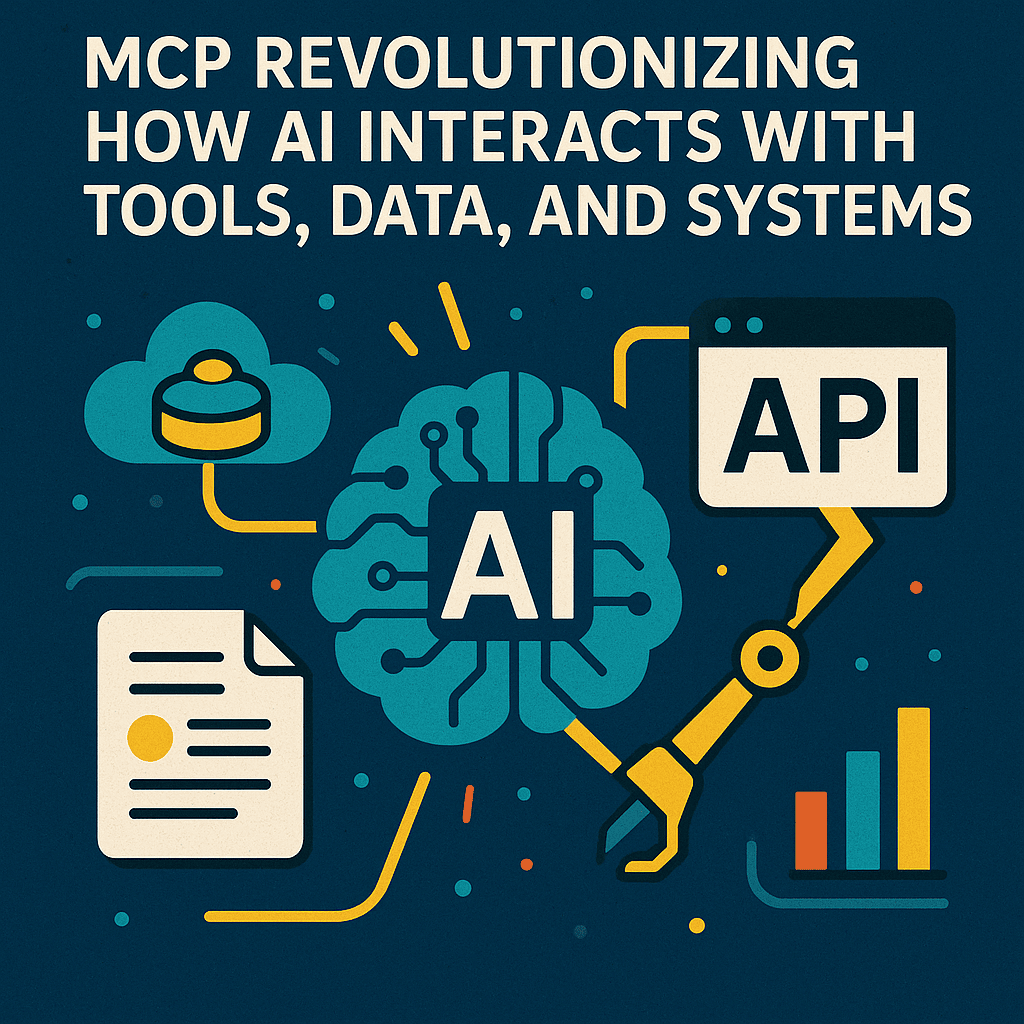

It’s a fair question, because MCP isn’t your typical API framework or static app. It’s a living, context-sharing bridge between AI models, data sources, and tools.

Unlike traditional APIs that just sit behind a REST endpoint, MCP servers are dynamic. They need to be reachable, secure, and low-latency, all while handling real-time model-to-tool communication.

So, let’s break it down.

We’ll explore where MCP servers can be hosted, how modern cloud infrastructure supports them, and why MCPfy’s single-click hosting is redefining what “easy deployment” means for the MCP ecosystem.

1. How Modern Hosting Works

Before diving into MCP specifically, let’s take a step back.

Most modern AI or microservice workloads are hosted in one of three environments:

- Cloud servers (AWS, Google Cloud, Azure) – Full control, scalability, but with setup complexity.

- Serverless platforms (Cloudflare Workers, Vercel, Fly.io) – Instant deployment, auto-scaling, minimal ops.

- Local or edge environments – Great for testing, private data, or latency-sensitive applications.

Each has tradeoffs.

But MCP brings a new dimension. It’s not just about where the code runs; it’s about how efficiently the model can access the context it needs.

2. What Makes Hosting MCP Servers Unique

MCP servers are designed to be lightweight, modular, and transport-agnostic.

That means you can host them:

- On a dedicated cloud VM

- Inside a serverless function

- Or even locally, for development and private workflows

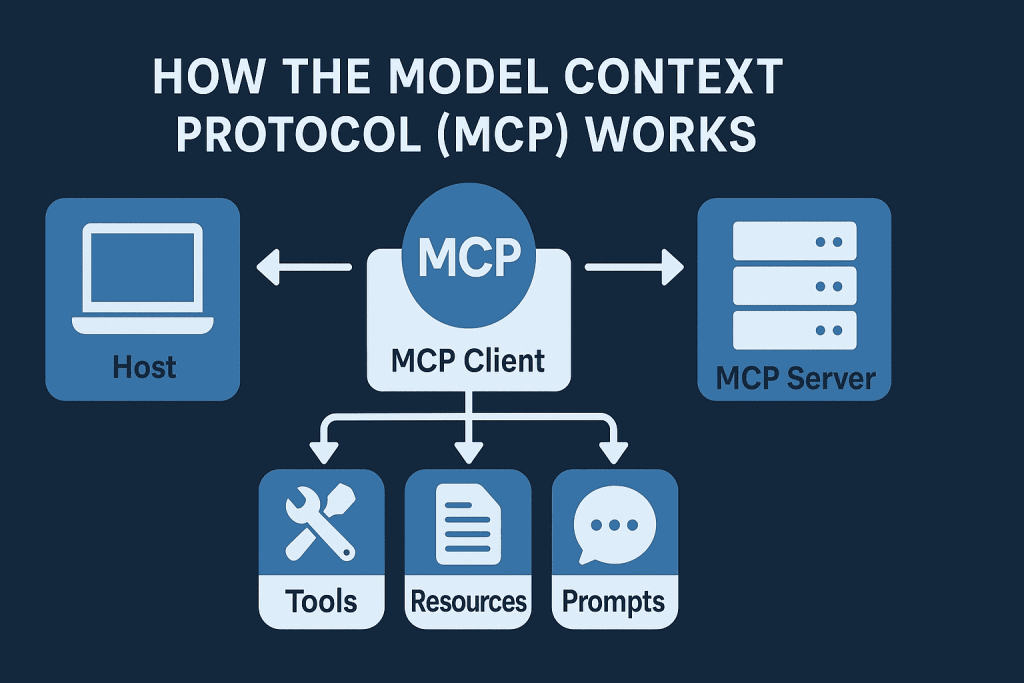

But here’s what sets MCP hosting apart from a typical REST API deployment:

- MCP servers maintain persistent tool definitions, and these need stable endpoints.

- They handle bi-directional model communication, often over streaming HTTP (the MCP specification’s Streamable HTTP transport).

- They may serve multiple clients or AI models at once, requiring concurrency control and secure access.

So while hosting an MCP server isn’t complex, it demands precision: a deployment that’s fast, secure, and compatible with any AI model.
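To make the "persistent tool definitions" point concrete, here is a minimal sketch of how a server might answer a `tools/list` request, the JSON-RPC 2.0 method MCP clients use to discover tools. It uses only the Python standard library. The tool name `lookup_customer` and the function `handle_message` are assumptions made up for illustration, not part of any SDK.

```python
import json

# Hypothetical tool registry, defined once at startup so every client
# that reaches this endpoint sees the same definitions.
TOOLS = [
    {
        "name": "lookup_customer",
        "description": "Fetch a customer record by ID.",
        "inputSchema": {
            "type": "object",
            "properties": {"customer_id": {"type": "string"}},
            "required": ["customer_id"],
        },
    }
]


def handle_message(raw: str) -> str:
    """Answer one JSON-RPC 2.0 request; only tools/list is sketched here."""
    request = json.loads(raw)
    if request.get("method") == "tools/list":
        reply = {
            "jsonrpc": "2.0",
            "id": request.get("id"),
            "result": {"tools": TOOLS},
        }
    else:
        # -32601 is the standard JSON-RPC "Method not found" error code.
        reply = {
            "jsonrpc": "2.0",
            "id": request.get("id"),
            "error": {"code": -32601, "message": "Method not found"},
        }
    return json.dumps(reply)
```

Because the registry lives at module level, it stays identical across requests. If the endpoint URL changes, clients lose track of these definitions, which is why a stable address matters.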

3. Hosting Options for MCP Servers

Let’s look at the three most common hosting scenarios and where MCP fits in each.

A. Hosting MCP Servers on Cloud Platforms

You can deploy MCP servers on any major cloud provider: AWS, Google Cloud, Azure, or DigitalOcean.

Typical setup:

- Deploy via container (Docker or Kubernetes)

- Use a reverse proxy like Nginx or Caddy

- Expose HTTPS endpoints for secure communication

Pros:

- Full customization and scaling control

- Easy integration with enterprise-grade data sources

Cons:

- Configuration overhead

- Requires DevOps experience

This option is great for organizations that already manage their infrastructure and want MCP to integrate deeply with existing systems.
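As a rough sketch of the setup above, the process inside the container can speak plain HTTP on the loopback interface while the reverse proxy (Nginx or Caddy) owns the public HTTPS endpoint. The `/mcp` path, port `8080`, and the names `McpHandler` and `make_server` are assumptions for illustration. The reply is a placeholder rather than a full MCP implementation.

```python
import json
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class McpHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        if self.path != "/mcp":  # hypothetical endpoint path
            self.send_error(404)
            return
        length = int(self.headers.get("Content-Length", 0))
        request = json.loads(self.rfile.read(length) or b"{}")
        # Placeholder reply; a real server would dispatch on request["method"].
        body = json.dumps(
            {"jsonrpc": "2.0", "id": request.get("id"), "result": {}}
        ).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def log_message(self, format, *args):
        pass  # keep the sketch quiet; a real deployment should log requests


def make_server(port: int = 8080) -> ThreadingHTTPServer:
    # Bind to loopback only: the reverse proxy is the public,
    # TLS-terminating entry point, so this port is never exposed directly.
    return ThreadingHTTPServer(("127.0.0.1", port), McpHandler)

# To run the sketch: make_server().serve_forever()
```

If the proxy runs in a separate container, loopback will not be reachable from it. In that case the server would need to bind to the container’s network interface instead, with access restricted at the network level.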

B. Hosting MCP on Serverless Platforms

Platforms like Cloudflare Workers, Vercel, and Fly.io have changed the game.

They allow developers to deploy applications with zero infrastructure management.

Hosting an MCP server on these platforms is ideal if you need:

- Low-latency responses

- Global edge deployment

- Instant scalability

Example:

A lightweight MCP server that connects to a remote database or CRM tool can be deployed as a Cloudflare Worker in under 30 seconds, once the project and credentials are set up.

The model calls your worker endpoint; the worker interprets the request, fetches data, and returns structured context, all without a server for you to manage.
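The request flow just described can be sketched as a stateless handler: everything it needs arrives in the request, and the data source is passed in rather than held in memory. This is plain Python for illustration, not Cloudflare’s Worker API. The tool name `get_record`, the argument `record_id`, and the helper names are all hypothetical.

```python
import json
from typing import Callable


def reply(request_id, key: str, payload: dict) -> str:
    """Build a JSON-RPC 2.0 response carrying either a result or an error."""
    return json.dumps({"jsonrpc": "2.0", "id": request_id, key: payload})


def handle_tool_call(raw: str, fetch_record: Callable[[str], dict]) -> str:
    """Interpret one tools/call request, fetch data, return structured content."""
    request = json.loads(raw)
    request_id = request.get("id")
    if request.get("method") != "tools/call":
        return reply(request_id, "error",
                     {"code": -32601, "message": "Method not found"})
    params = request.get("params", {})
    if params.get("name") != "get_record":  # hypothetical tool name
        return reply(request_id, "error",
                     {"code": -32602, "message": "Unknown tool"})
    record = fetch_record(params.get("arguments", {}).get("record_id", ""))
    # MCP tool results carry a list of content items plus an isError flag.
    result = {
        "content": [{"type": "text", "text": json.dumps(record)}],
        "isError": False,
    }
    return reply(request_id, "result", result)
```

Nothing is kept between calls, so the platform can start and stop instances freely. That is the property that makes this kind of server a good fit for serverless hosting.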

Pros:

- Near-instant setup

- No infrastructure maintenance

- Built-in security and DDoS protection

Cons:

- Execution time and memory limits (depending on platform)

- Limited persistent storage (rarely a problem for stateless MCP calls)

C. Local and Edge Hosting

Sometimes, you want your MCP server close to the data, not in the cloud.

That’s where local and edge hosting shine:

- Ideal for enterprise data compliance

- Perfect for testing or private workflows

- Can be paired with VPNs or firewalls for restricted access

For example, a hospital could run an MCP server on-premise to expose anonymized medical insights to an AI model, without ever sending data to the public cloud.

MCP’s lightweight architecture makes that entirely possible.
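One way to picture the "restricted access" point is an allowlist check that runs before any request is handled, as a second layer behind the VPN or firewall. The sketch below uses Python’s standard `ipaddress` module. The subnet `10.8.0.0/24` and the name `is_allowed` are assumptions chosen for illustration.

```python
import ipaddress

# Hypothetical allowlist: a VPN subnet plus loopback for local testing.
ALLOWED_NETWORKS = [
    ipaddress.ip_network("10.8.0.0/24"),
    ipaddress.ip_network("127.0.0.0/8"),
]


def is_allowed(client_ip: str) -> bool:
    """Return True only if the caller's address is inside an allowed network."""
    try:
        address = ipaddress.ip_address(client_ip)
    except ValueError:
        # Anything that is not a valid IP address is rejected outright.
        return False
    return any(address in network for network in ALLOWED_NETWORKS)
```

A check like this does not replace the firewall. It simply means a misconfigured network rule does not immediately expose the server.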

4. The Challenges of Self-Hosting MCP

Let’s be honest: while it’s exciting that MCP can run anywhere, hosting it manually isn’t always smooth.

You might face:

- SSL and reverse proxy setup issues

- Network routing or authentication mismatches

- Debugging model connections across environments

And for developers who just want to build, not babysit servers, all that setup can kill the flow.
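When a connection does fail, a good first step is working out which layer is at fault. Here is a small sketch, assuming the client connects with Python’s `urllib`, that maps a failed request to the likeliest of the problems listed above. The function name `diagnose` and the message wording are made up for illustration.

```python
import ssl
import urllib.error


def diagnose(exc: Exception) -> str:
    """Map a failed connection attempt to the likeliest setup problem."""
    # HTTPError subclasses URLError, so it has to be checked first.
    if isinstance(exc, urllib.error.HTTPError):
        if exc.code in (401, 403):
            return "authentication: check the token or header the client sends"
        if exc.code in (502, 503, 504):
            return "reverse proxy: the proxy is up but cannot reach the server"
        return f"http: unexpected status {exc.code}"
    if isinstance(exc, urllib.error.URLError):
        # urllib wraps TLS failures in URLError and keeps the cause in .reason.
        if isinstance(exc.reason, ssl.SSLError):
            return "tls: certificate or SSL configuration problem"
        return "network: DNS, routing, or firewall problem"
    return "unknown: inspect the full traceback"
```

In practice you would wrap the `urllib.request.urlopen(...)` call in a `try` block and pass the caught exception to `diagnose`. The mapping is a heuristic, not a guarantee: a 502, for example, can have causes other than an unreachable upstream.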

That’s exactly the problem MCPfy was built to solve.

5. The MCPfy Advantage: Single-Click Hosting

Here’s where we simplify everything.

With MCPfy, hosting an MCP server doesn’t require DevOps, SSL certs, or YAML files.

You can literally go from zero to deployed in a single click.

How it Works

- Log in to your MCPfy dashboard.

- Click “MCP Server.”

- Choose your configuration (or use presets).

- Instantly get a secure, public endpoint with built-in authentication and monitoring.

Your MCP server is deployed, verified, and ready to use. No complex setup, no hidden configuration.

It’s hosting as it should be: fast, intuitive, and developer-friendly.

Why It Matters

Developers shouldn’t have to think about:

- SSL

- Reverse proxies

- Network isolation

- Cloud billing surprises

They should focus on what MCP was meant for: connecting AI models with tools and context.

MCPfy makes that possible.

It abstracts the hosting layer so you can start building faster and still scale globally when you’re ready.

6. Where MCP Is Headed Next

The future of MCP hosting is moving toward hybrid architectures, where:

- Core MCP servers run on edge networks (for low latency)

- Sensitive MCP instances stay local (for compliance)

- Managed hosting platforms like MCPfy handle orchestration and scaling

Imagine deploying a global AI context layer without writing a single deployment script.

That’s the kind of world MCPfy is enabling.

Conclusion

Where is MCP hosted?

The simple answer: anywhere you want.

From local servers to global cloud workers, MCP adapts to whatever environment your model needs.

But if you want to skip the complexity and start building immediately, MCPfy lets you host your MCP server in a single click.

No ops, no downtime, no headaches.

Just pure, context-driven AI infrastructure, ready to scale.