Introduction

The Model Context Protocol (MCP) has changed the way AI systems connect, communicate, and operate.

If you’ve ever wondered why MCP exists, who created it, or why it’s such a big deal, this is your one-stop guide.

In this article, we’ll explore the complete journey, from the early days of MOP (Model Object Protocol) to the rise of MCP servers and the protocol’s role in redefining AI interoperability.

By the end, you’ll understand not just what MCP is, but why it matters.

1. Why MOP Was Created

Before MCP, AI systems lacked a standard way to talk to external tools or data.

Every integration was custom-built. Each application carried its own logic for calling APIs, parsing results, and managing data flow, so connecting N models to M tools could mean writing N × M separate connectors. This made scaling nearly impossible.

To solve this, developers introduced MOP (Model Object Protocol), a foundational idea that allowed AI models to interact with structured “objects” in their environment.

MOP aimed to:

- Standardize how AI models accessed external data.

- Simplify tool usage by providing a predictable schema.

- Enable consistent communication between model and environment.

In essence, MOP gave AI models their first true interface with the outside world.

But as models evolved, MOP’s rigid design couldn’t keep up with the growing need for flexibility and real-time context.

2. Why MCP Replaced MOP

The limitations of MOP led to the birth of the Model Context Protocol (MCP), a new standard that focused on context, flexibility, and interoperability.

While traditional APIs define how applications communicate, MCP defines how AI models interact with tools, databases, and external systems.

Instead of replacing APIs, MCP builds on top of them.

You can think of MCP as a smart layer above APIs, one that helps models understand how to use tools intelligently.

With MCP:

- Models gain access to standardized tool definitions.

- Communication becomes consistent across AI systems.

- Developers no longer need custom connectors for every model.
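To make “standardized tool definitions” concrete, here is roughly what a single tool looks like when a server advertises it. MCP describes each tool with a name, a description, and an inputSchema written in JSON Schema. The get_weather tool and the validate_arguments helper below are hypothetical illustrations; the helper checks only required keys, unknown keys, and top-level types, and is not a full JSON Schema validator.

```python
# A hypothetical tool definition in the shape MCP uses when a server
# advertises a tool: name, description, and a JSON Schema "inputSchema".
weather_tool = {
    "name": "get_weather",
    "description": "Return the current weather for a city.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "city": {"type": "string", "description": "City name, e.g. Paris"},
        },
        "required": ["city"],
    },
}

# Map JSON Schema primitive type names to Python types for a rough check.
JSON_TYPES = {
    "string": str,
    "number": (int, float),
    "integer": int,
    "boolean": bool,
    "object": dict,
    "array": list,
}


def validate_arguments(tool: dict, arguments: dict) -> list:
    """Return a list of problems; an empty list means the call looks valid.

    Hypothetical helper: checks required keys, rejects unknown keys (stricter
    than JSON Schema's default), and checks top-level types only.
    """
    schema = tool["inputSchema"]
    properties = schema.get("properties", {})
    errors = []
    for key in schema.get("required", []):
        if key not in arguments:
            errors.append(f"missing required argument: {key}")
    for key, value in arguments.items():
        spec = properties.get(key)
        if spec is None:
            errors.append(f"unexpected argument: {key}")
            continue
        type_name = spec.get("type")
        expected = JSON_TYPES.get(type_name)
        # bool is a subclass of int in Python, so reject it for non-boolean types.
        wrong_bool = isinstance(value, bool) and type_name != "boolean"
        if expected is not None and (wrong_bool or not isinstance(value, expected)):
            errors.append(f"argument {key} should be {type_name}")
    return errors


print(validate_arguments(weather_tool, {"city": "Paris"}))  # []
print(validate_arguments(weather_tool, {"city": 42}))       # ['argument city should be string']
```

Because every tool is described the same way, a client can check a model’s proposed arguments before sending them, whichever server the tool came from.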

MCP turns unstructured integrations into context-aware conversations.

3. Why MCP Servers Are Needed

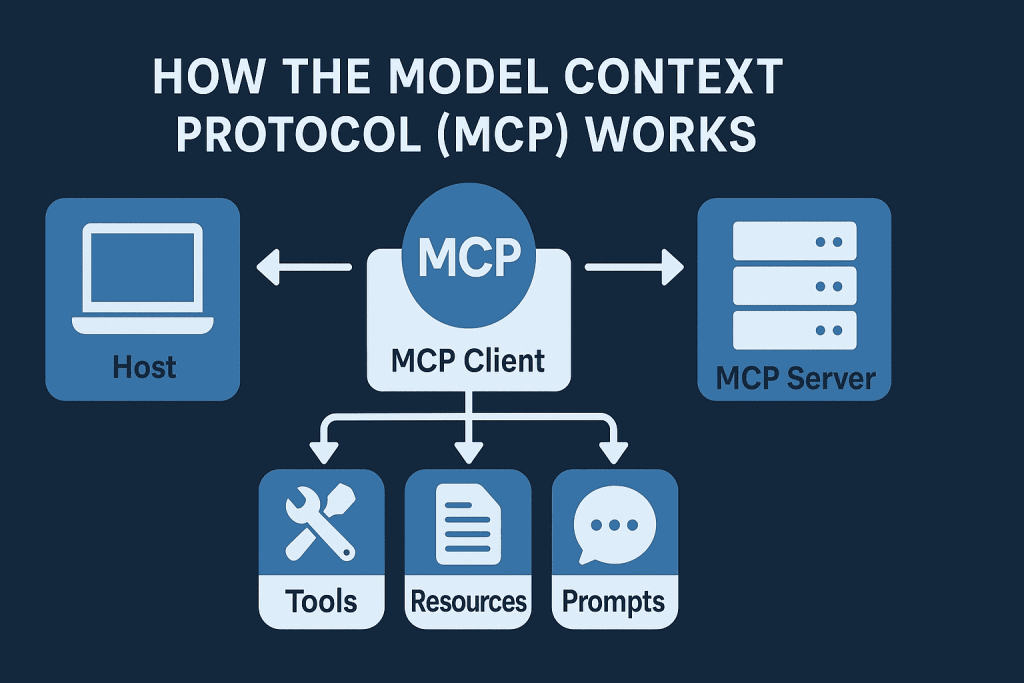

If MCP is the protocol, MCP servers are the backbone of the ecosystem.

They host the tools, define capabilities, and manage real-time communication between models and data sources.

MCP servers are needed because they:

- Provide centralized tool discovery and authentication.

- Allow AI agents to dynamically interact with live systems.

- Offer scalability and reliability across multiple data streams.

Each MCP server acts as a universal bridge, ensuring that any compliant model can discover, understand, and use the tools it provides.

This makes MCP servers essential for building modern AI applications that require live data, complex automation, or multi-agent coordination.
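The discovery-and-call loop described above can be sketched in a few dozen lines. MCP messages are JSON-RPC 2.0, and the tools/list and tools/call method names and result fields below follow the MCP specification as we understand it. The ToolServer class, its register and handle methods, and the add tool are hypothetical; a real server (typically built with an official SDK) also handles the initialize handshake, capability negotiation, authentication, and a transport such as stdio or HTTP.

```python
from typing import Any, Callable


def _result(req_id: Any, text: str, is_error: bool) -> dict:
    """Wrap tool output in a JSON-RPC 2.0 response with MCP-style text content."""
    return {
        "jsonrpc": "2.0",
        "id": req_id,
        "result": {"content": [{"type": "text", "text": text}], "isError": is_error},
    }


def _error(req_id: Any, code: int, message: str) -> dict:
    """Build a JSON-RPC 2.0 error response."""
    return {"jsonrpc": "2.0", "id": req_id, "error": {"code": code, "message": message}}


class ToolServer:
    """Hypothetical in-memory sketch of how an MCP server answers tool requests.

    Omitted on purpose: initialize handshake, capabilities, auth, transport.
    """

    def __init__(self) -> None:
        self._tools: dict = {}  # tool name -> (definition, python callable)

    def register(self, name: str, description: str, input_schema: dict,
                 func: Callable[..., Any]) -> None:
        definition = {"name": name, "description": description, "inputSchema": input_schema}
        self._tools[name] = (definition, func)

    def handle(self, request: dict) -> dict:
        req_id = request.get("id")
        method = request.get("method")
        if method == "tools/list":
            # Discovery: advertise every registered tool definition.
            tools = [definition for definition, _ in self._tools.values()]
            return {"jsonrpc": "2.0", "id": req_id, "result": {"tools": tools}}
        if method == "tools/call":
            params = request.get("params", {})
            name = params.get("name")
            if name not in self._tools:
                return _error(req_id, -32602, f"Unknown tool: {name}")  # invalid params
            _, func = self._tools[name]
            try:
                output = func(**params.get("arguments", {}))
            except Exception as exc:
                # Execution failures go back as a result flagged isError,
                # so the model can read the message and adjust.
                return _result(req_id, f"{type(exc).__name__}: {exc}", is_error=True)
            return _result(req_id, str(output), is_error=False)
        return _error(req_id, -32601, f"Method not found: {method}")


server = ToolServer()
server.register(
    "add", "Add two integers.",
    {"type": "object",
     "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
     "required": ["a", "b"]},
    lambda a, b: a + b,
)
reply = server.handle({"jsonrpc": "2.0", "id": 1, "method": "tools/call",
                       "params": {"name": "add", "arguments": {"a": 2, "b": 3}}})
print(reply["result"]["content"][0]["text"])  # 5
```

Note the split between the two kinds of failure: an unknown tool or method is a protocol error, while a tool that runs and fails returns a normal result with isError set.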

4. Why MCP Won (and Why It’s a Big Deal)

The AI industry has seen countless standards come and go, but MCP won for a few simple reasons:

- It solves the right problem. MCP addresses the most critical bottleneck in AI: connecting reasoning models with the tools they need.

- It’s model-agnostic. MCP works with any AI model: OpenAI, Anthropic, Gemini, Mistral, and more.

- It’s interoperable and composable. One client can connect to many MCP servers, and a server can itself act as a client of other servers, so capabilities compose into a larger network of connected agents.

- It’s easy to implement. With the official SDKs, developers can set up a server within minutes, and agent frameworks can connect to one just as quickly (OpenAI’s Agents SDK, for example, offers HostedMCPTool for remote MCP servers).
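Model-agnosticism works in practice because the host application, not the model, speaks MCP: the client fetches tool definitions from a server and translates them into whatever function-calling format the chosen model expects. The converters below are a hypothetical sketch; the target shapes reflect OpenAI’s Chat Completions tools and Anthropic’s Messages API tools as publicly documented, but check current vendor docs before relying on them.

```python
import json

# A tool definition as an MCP server might advertise it (hypothetical example).
mcp_tool = {
    "name": "get_weather",
    "description": "Return the current weather for a city.",
    "inputSchema": {
        "type": "object",
        "properties": {"city": {"type": "string"}},
        "required": ["city"],
    },
}


def to_openai_tool(tool: dict) -> dict:
    """Chat Completions style: the JSON Schema goes under function.parameters."""
    return {
        "type": "function",
        "function": {
            "name": tool["name"],
            "description": tool.get("description", ""),
            "parameters": tool["inputSchema"],
        },
    }


def to_anthropic_tool(tool: dict) -> dict:
    """Messages API style: the JSON Schema goes under input_schema."""
    return {
        "name": tool["name"],
        "description": tool.get("description", ""),
        "input_schema": tool["inputSchema"],
    }


# The same MCP definition feeds both vendors without a custom connector per model.
print(json.dumps(to_openai_tool(mcp_tool), indent=2))
print(json.dumps(to_anthropic_tool(mcp_tool), indent=2))
```

Since both formats reuse JSON Schema, the translation is a thin reshaping step rather than a per-model integration.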

Most importantly, MCP didn’t just improve how models use APIs; it redefined the relationship entirely.

MCP is to AI what APIs were to the web: a universal layer of understanding.

5. Who Created MCP (and Who Invented MOP)

The Model Object Protocol (MOP) was the first step, a developer-driven idea to make AI models tool-aware.

Later, in November 2024, Anthropic formally introduced MCP (Model Context Protocol) as an open standard for model-to-tool communication.

Their goal was clear: create a protocol that any AI model, regardless of vendor, could understand and use.

Unlike proprietary SDKs, MCP was designed to be open, collaborative, and extendable, encouraging global adoption and community-driven evolution.

Soon, other platforms, including OpenAI’s Agents SDK, added MCP support, accelerating its spread across the industry.

6. Why MCP Is Such a Big Deal

MCP’s real innovation lies in how it redefines the AI interaction model.

Old architecture:

AI Model → Custom Integration → API → Data Source

With MCP:

AI Model → MCP Client → MCP Server → Data Source

This new structure replaces per-model custom integrations with one shared interface, reducing complexity and improving context sharing.

It allows developers to focus on intelligence, not infrastructure.
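In the MCP layout, the model never talks to the data source directly; the host application’s MCP client sends JSON-RPC messages to the server over a transport. For the stdio transport, the specification frames each message as one line of JSON terminated by a newline. The helpers below (make_request, encode_message, decode_stream) and the save_note tool are hypothetical names sketching that framing with only the standard library.

```python
import itertools
import json
from typing import Optional

_ids = itertools.count(1)  # JSON-RPC request ids must be unique within a session


def make_request(method: str, params: Optional[dict] = None) -> dict:
    """Build a JSON-RPC 2.0 request, e.g. for tools/list or tools/call."""
    request = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        request["params"] = params
    return request


def encode_message(message: dict) -> bytes:
    """Serialize one message as a single UTF-8 line.

    json.dumps escapes newlines inside strings, so the only raw newline
    is the delimiter appended at the end.
    """
    return (json.dumps(message, separators=(",", ":")) + "\n").encode("utf-8")


def decode_stream(data: bytes) -> list:
    """Split a newline-delimited byte stream back into messages."""
    return [json.loads(line) for line in data.split(b"\n") if line.strip()]


# What a client might write to a server's stdin: discovery, then a call.
stream = encode_message(make_request("tools/list")) + encode_message(
    make_request("tools/call", {"name": "save_note",
                                "arguments": {"text": "first line\nsecond line"}})
)
for message in decode_stream(stream):
    print(message["id"], message["method"])
```

Keeping each message on one line lets either side parse the stream incrementally without a length header.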

Key advantages:

- Unified tool discovery

- Seamless data synchronization

- Plug-and-play multi-agent communication

- Model-agnostic interoperability

In short, MCP makes intelligent systems scalable, adaptable, and future-ready.

7. How MCP Changed the AI Ecosystem

Since its release, MCP has become a cornerstone of modern AI development.

Here’s how it’s reshaped the ecosystem:

- Real-time agents: Models now retrieve live, structured data directly through MCP servers.

- Multi-model orchestration: One MCP server can serve several AI models simultaneously.

- Plug-and-play tools: Developers can now expose any function or API as a reusable MCP service.

Platforms like MCPfy.ai have made this process accessible to everyone, offering practical resources, tutorials, and tools to help developers build and deploy their own MCP servers.
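Exposing “any function or API” as a reusable tool mostly comes down to describing the function’s parameters as JSON Schema. Official SDKs automate this (the Python SDK’s FastMCP, for instance, builds schemas from type hints in its tool decorator). The stdlib-only function_to_tool helper and the search_orders function below are hypothetical; they show the idea, not any SDK’s actual implementation.

```python
import inspect
from typing import Callable, get_type_hints

# Rough mapping from Python annotations to JSON Schema type names.
PY_TO_JSON = {str: "string", int: "integer", float: "number",
              bool: "boolean", list: "array", dict: "object"}


def function_to_tool(func: Callable) -> dict:
    """Derive an MCP-style tool definition from a plain Python function.

    Hypothetical helper: parameters without defaults become "required",
    and unannotated or unmapped types are left unconstrained.
    """
    hints = get_type_hints(func)
    properties, required = {}, []
    for name, param in inspect.signature(func).parameters.items():
        if param.kind in (inspect.Parameter.VAR_POSITIONAL, inspect.Parameter.VAR_KEYWORD):
            continue  # *args / **kwargs have no fixed schema
        prop = {}
        json_type = PY_TO_JSON.get(hints.get(name))
        if json_type is not None:
            prop["type"] = json_type
        properties[name] = prop
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "inputSchema": {"type": "object", "properties": properties, "required": required},
    }


def search_orders(customer_id: str, limit: int = 10) -> list:
    """Find a customer's most recent orders."""
    return []  # placeholder body for the example


print(function_to_tool(search_orders))
```

The resulting definition can be registered with a server as-is, which is what makes existing functions “plug-and-play” tools.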


8. The Future of MCP

The future of the Model Context Protocol looks incredibly promising.

With each new AI model release, more vendors are adding MCP support.

Upcoming innovations include:

- Broader use of HTTP-based transports (such as Streamable HTTP) for remote server communication.

- Edge-based MCP servers for local processing.

- Global MCP registries for public tool discovery.

As this standard matures, we’ll see an era where:

Any AI model can call any tool, anywhere, instantly.

This level of interoperability will make MCP the foundation of intelligent infrastructure for years to come.

Conclusion

From MOP’s first iteration to MCP’s universal adoption, the evolution of these protocols tells a simple story: AI systems are becoming truly connected.

MOP taught models to use tools.

MCP taught them to understand context.

And as developers continue to push the boundaries of AI-to-tool integration, MCP stands not just as a technical achievement, but as the backbone of modern AI interoperability.

Key Takeaways

- MOP was the first step toward structured AI-tool interaction.

- MCP evolved from MOP to standardize model-to-tool communication.

- MCP servers enable scalable, real-time, multi-agent AI ecosystems.

- MCP’s open standard ensures interoperability across all major AI models.

- The future of MCP will define how AI systems collaborate and share context.